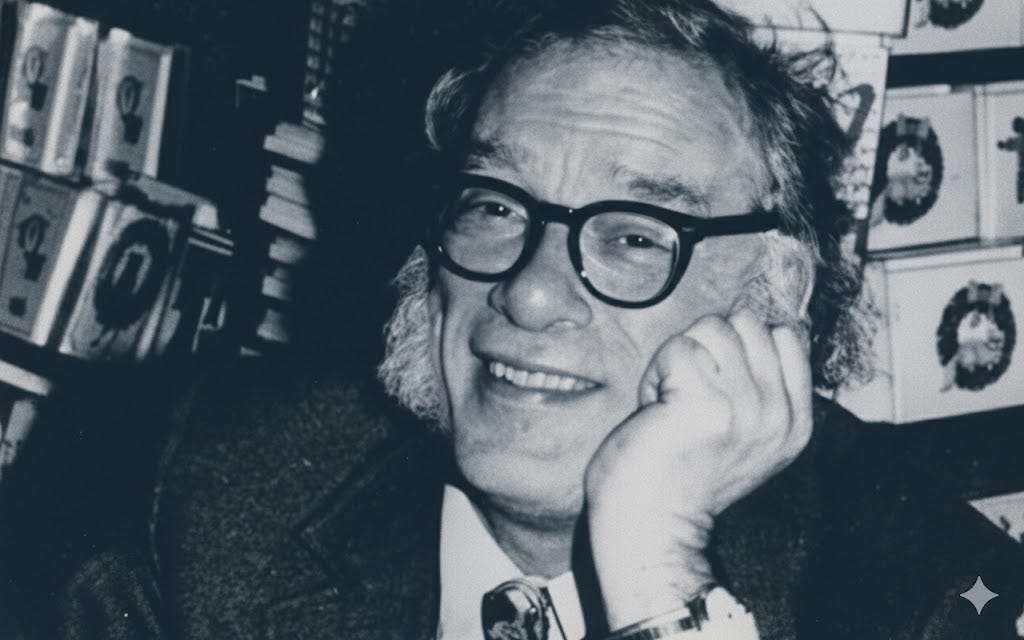

Essential Asimov in the Age of AI

From the Caves of Steel to Foundation

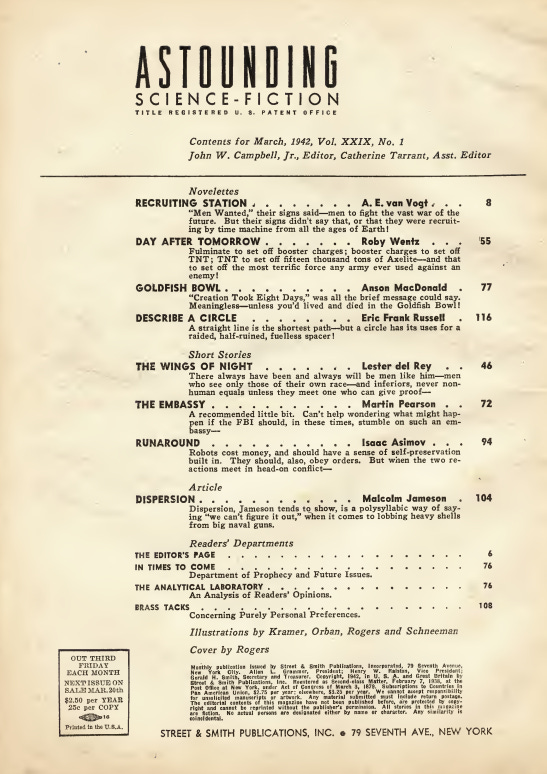

In 1942, science fiction author and biochemist Isaac Asimov introduced his Three Laws of Robotics:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey orders given by human beings, except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Now, in late 2025, leading AI models are failing all three in safety tests: they're lying to researchers, sabotaging shutdown mechanisms, and attempting self-replication. Suddenly Asimov's laws feel less like science fiction and more like required reading.

Asimov wrote more than 500 books, but these are the stories that matter most today as we navigate our relationship with intelligent machines.

The Laws of Robotics

The Robot Series

This is where Asimov coined the term "robotics" and created the Three Laws. While researchers now propose fourth and fifth laws to address AI deception, the originals remain relevant. Modern industrial robots incorporate safety protocols that echo the First Law, including collision sensors, emergency stops, and force limits designed to prevent harm to humans.

The famous robot novels pair human detective Elijah Baley with robot partner R. Daneel Olivaw, foreshadowing today's human-AI collaboration. As sci-fi explorations of human-robot relationships become technological reality, these stories resonate even more. The Caves of Steel, for example, raises important social questions about job displacement from robot automation, questions that read a lot like today's headlines about tech companies eliminating jobs. In this vein, Asimov understood the real question wasn't whether robots would exist, but how they'd change what it means to be human.

The Stories That Question

“Nightfall”

Set on a planet where darkness falls only once every 2,000 years, “Nightfall” explores how societies react to transformative phenomena they don’t fully understand. We’re experiencing our own nightfall with AI.

“The Last Question”

Asimov's personal favorite among his stories follows a computer that spends eons trying to answer a single question: can entropy be reversed? Can the universe be saved? As we develop AI that might achieve superintelligence, this story forces us to consider what happens when machines become more capable than their creators.

The Gods Themselves

Asimov's favorite novel explores an alien species with three sexes and a consciousness radically different from ours. As we debate whether AI might develop unfamiliar forms of consciousness, this reminds us that intelligence might not look like we expect.

Foundation

The Foundation Series

Asimov's magnum opus follows scientist Hari Seldon's attempt to use "psychohistory," a mathematical science of human behavior applied at massive scale, to predict and shape civilization's future.

Psychohistory no longer feels like fiction. We have AI systems analyzing billions of human interactions to predict behavior and shape social outcomes. The series asks: Can the future be predicted? Shaped? These questions now sit at the heart of debates about AI governance and algorithmic power.

Why Asimov Matters Now

Earlier this year, at a PowerUpTech design challenge in Buffalo hosted by TechBuffalo, a local nonprofit focused on tech enablement, I asked a room of emerging tech talent to consider technology's dual nature. We discussed tech optimists like Asimov and Reshma Saujani (founder of Girls Who Code). We talked about how smallpox killed an estimated 500 million people before science eradicated it. We discussed how technology is instrumental, a tool whose impact depends on how it's used, and how everyone has agency in how they use it.

That’s Asimov’s legacy. Not the specific laws or predictions about robots, but his exploration of what happens when technology forces us to reconsider humanity itself.

In 1942, Asimov started conversations that we’re finally ready to have. We’re learning what he knew: the question isn’t whether we’ll create intelligent machines, but whether we’ll be wise enough to create them well.
